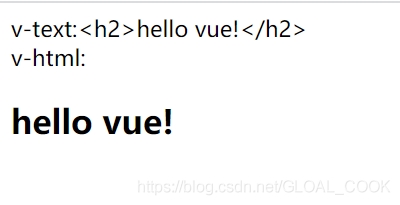

Latency when converting I420 data to JPEG with GStreamer

1. Converting I420 data to JPEG
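The encoder below copies the caller's bytes straight into a GstBuffer, so it can help to check beforehand that the buffer length matches the resolution. The following is a minimal sketch, not part of the original code: the helper names are hypothetical, and the padded variant assumes GStreamer's default raw-video layout, which (as far as I know) rounds each row up to a multiple of 4 bytes and the height up to a multiple of 2.

```cpp
#include <cstddef>

// Hypothetical helpers: round n up to the next multiple of 2 or 4.
inline std::size_t roundUp2(std::size_t n) { return (n + 1) & ~static_cast<std::size_t>(1); }
inline std::size_t roundUp4(std::size_t n) { return (n + 3) & ~static_cast<std::size_t>(3); }

// Tightly packed I420: a full-resolution Y plane followed by
// two chroma planes subsampled by 2 in each direction.
inline std::size_t packedI420Size(std::size_t width, std::size_t height) {
    const std::size_t chromaW = roundUp2(width) / 2;
    const std::size_t chromaH = roundUp2(height) / 2;
    return width * height + 2 * chromaW * chromaH;
}

// Assumed default GStreamer layout: every row padded to 4 bytes,
// plane heights derived from the height rounded up to 2.
inline std::size_t paddedI420Size(std::size_t width, std::size_t height) {
    const std::size_t yStride = roundUp4(width);
    const std::size_t cStride = roundUp4(roundUp2(width) / 2);
    const std::size_t h2 = roundUp2(height);
    return yStride * h2 + 2 * cStride * (h2 / 2);
}
```

For 640x480 both functions give the same size, so a tightly packed frame can be pushed as-is. For a width such as 642 the two sizes differ, and a tightly packed frame would probably need to be repacked or described with explicit strides before being encoded.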

JPegEncoder.h

    #pragma once

    #include <cstdint>
    #include <vector>
    #include <functional>

    #include <gst/gst.h>
    #include <gst/app/gstappsink.h>

    #include "Resolution.h"
    #include "VideoSettings.h"

    namespace sinftech {
    namespace tv {

    class JpegEncoder {
    public:
        using Handler = std::function<void(const uint8_t* data, size_t size, const Resolution& resolution)>;

        JpegEncoder(Handler&& handler);
        ~JpegEncoder();

        void encode(const uint8_t* data, size_t size, const Resolution& resolution);
        void start(const VideoSettings& settings);
        void stop();

    private:
        static GstFlowReturn _onEncoded(GstAppSink *appsink, gpointer user_data);

        GstElement* _pipeline;
        GstElement* _source;
        GstElement* _queue0;
        GstElement* _convert;
        GstElement* _scale;
        GstElement* _rate;
        GstElement* _capsfilter;
        GstElement* _queue1;
        GstElement* _encode;
        GstElement* _queue2;
        GstElement* _sink;
        Handler _handler;
    };

    }//namespace tv
    }//namespace sinftech

JPegEncoder.cpp

    #include "JpegEncoder.h"

    #include <cstring>  // memcpy

    #include <gst/app/gstappsrc.h>
    #include <gst/video/video.h>

    #include "Check.h"

    #include <windows.h>

    namespace sinftech {
    namespace tv {

    // appsink "new-sample" callback: hands each encoded JPEG frame to the handler.
    GstFlowReturn JpegEncoder::_onEncoded(GstAppSink *appsink, gpointer user_data) {
        JpegEncoder* pThis = static_cast<JpegEncoder*>(user_data);
        GstSample* sample = gst_app_sink_pull_sample(appsink);
        if (sample != NULL) {
            GstCaps* caps = gst_sample_get_caps(sample);
            gint width = 0, height = 0;
            GstStructure* structure = gst_caps_get_structure(caps, 0);
            gst_structure_get_int(structure, "width", &width);
            gst_structure_get_int(structure, "height", &height);
            GstBuffer* buffer = gst_sample_get_buffer(sample);
            if (buffer != NULL) {
                GstMapInfo map;
                if (gst_buffer_map(buffer, &map, GST_MAP_READ)) {
                    if (pThis->_handler) {
                        pThis->_handler(map.data, map.size, {uint16_t(width), uint16_t(height)});
                    }
                    gst_buffer_unmap(buffer, &map);
                }
            }
            gst_sample_unref(sample);
        }
        return GST_FLOW_OK;
    }

    // Pipeline: appsrc ! queue ! videoconvert ! videoscale ! videorate ! capsfilter ! queue ! jpegenc ! queue ! appsink
    JpegEncoder::JpegEncoder(Handler&& handler)
        : _handler(std::move(handler)) {
        gst_init(nullptr, nullptr);
        _pipeline = chk(gst_pipeline_new(nullptr));
        _source = chk(gst_element_factory_make("appsrc", nullptr));
        _queue0 = chk(gst_element_factory_make("queue", nullptr));
        _convert = chk(gst_element_factory_make("videoconvert", nullptr));
        _scale = chk(gst_element_factory_make("videoscale", nullptr));
        _rate = chk(gst_element_factory_make("videorate", nullptr));
        _capsfilter = chk(gst_element_factory_make("capsfilter", nullptr));
        _queue1 = chk(gst_element_factory_make("queue", nullptr));
        _encode = chk(gst_element_factory_make("jpegenc", nullptr));
        _queue2 = chk(gst_element_factory_make("queue", nullptr));
        _sink = chk(gst_element_factory_make("appsink", nullptr));

        g_object_set(_source, "is-live", true, "do-timestamp", true, "format", GST_FORMAT_TIME, NULL);
        g_object_set(_queue0, "leaky", 2, NULL);
        g_object_set(_capsfilter, "caps-change-mode", 1, NULL);
        g_object_set(_queue1, "leaky", 2, NULL);
        g_object_set(_queue2, "leaky", 2, NULL);
        g_object_set(_sink, "sync", false, "enable-last-sample", false, "drop", true, "max-buffers", 200, "max-lateness", 1000000000ll, NULL);

        GstAppSinkCallbacks callbacks{nullptr, nullptr, &_onEncoded};
        gst_app_sink_set_callbacks(GST_APP_SINK(_sink), &callbacks, this, nullptr);

        gst_bin_add_many(GST_BIN(_pipeline), _source, _queue0, _convert, _scale, _rate, _capsfilter, _queue1, _encode, _queue2, _sink, NULL);
        gst_element_link_many(_source, _queue0, _convert, _scale, _rate, _capsfilter, _queue1, _encode, _queue2, _sink, NULL);
    }

    JpegEncoder::~JpegEncoder() {
        gst_element_set_state(_pipeline, GST_STATE_NULL);
        gst_object_unref(_pipeline);
    }

    // Applies the target format, resolution and frame rate, then starts the pipeline.
    void JpegEncoder::start(const VideoSettings& settings) {
        stop();
        GstCaps* caps = gst_caps_new_simple("video/x-raw",
            "format", G_TYPE_STRING, "I420",
            "width", G_TYPE_INT, settings.resolution.width,
            "height", G_TYPE_INT, settings.resolution.height,
            "framerate", GST_TYPE_FRACTION, settings.fps, 1,
            NULL);
        g_object_set(_capsfilter, "caps", caps, NULL);
        gst_caps_unref(caps);
        gst_element_set_state(_pipeline, GST_STATE_PLAYING);
    }

    void JpegEncoder::stop() {
        gst_element_set_state(_pipeline, GST_STATE_NULL);
    }

    // Copies one I420 frame into a GstBuffer and pushes it into appsrc.
    void JpegEncoder::encode(const uint8_t* data, size_t size, const Resolution& resolution) {
        GstState state = GST_STATE_NULL;
        GstState pending = GST_STATE_NULL;
        gst_element_get_state(_pipeline, &state, &pending, 0);
        if (state == GST_STATE_PLAYING || pending == GST_STATE_PLAYING) {
            GstBuffer* buffer = gst_buffer_new_and_alloc(size);
            if (buffer != NULL) {
                GstMapInfo map;
                if (gst_buffer_map(buffer, &map, GST_MAP_WRITE)) {
                    memcpy(map.data, data, size);
                    gst_buffer_unmap(buffer, &map);
                    GstVideoInfo videoInfo;
                    gst_video_info_set_format(&videoInfo, GST_VIDEO_FORMAT_I420, resolution.width, resolution.height);
                    GstCaps* caps = gst_video_info_to_caps(&videoInfo);
                    GstSample* sample = gst_sample_new(buffer, caps, nullptr, nullptr);
                    gst_caps_unref(caps);
                    if (sample != NULL) {
                        gst_app_src_push_sample(GST_APP_SRC(_source), sample);
                        gst_sample_unref(sample);
                    }
                }
                gst_buffer_unref(buffer);
            }
        }
    }

    }//namespace tv
    }//namespace sinftech

Testing showed that the videorate element introduces latency (GStreamer spends time synchronizing timestamps) and that a single input frame can produce several output frames. The latency can be explained as follows:

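The cost of GStreamer's videorate element depends on several factors, including the input frame rate, the output frame rate, and the performance of the hardware, so it should be measured and tuned for the specific setup. In general videorate is cheap, because it only adjusts frame timestamps and performs no complex image processing. To reach the target frame rate, however, it duplicates or drops frames, which is why one input frame can yield several outputs. If the input and output frame rates differ greatly, or if additional transcoding is needed, some extra delay can result.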
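To make the "one input, several outputs" effect concrete, here is a small standalone sketch. It is a simplified model and not videorate's actual algorithm: it assumes that output slot k simply shows the input frame with index floor(k * inFps / outFps). The function name and parameters are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Simplified frame-rate conversion model (an assumption, not GStreamer code):
// returns, for each input frame, how many output frames it produces.
inline std::vector<int> outputsPerInput(int inputFrames, int inFps, int outFps) {
    if (inputFrames <= 0 || inFps <= 0 || outFps <= 0) return {};
    std::vector<int> counts(static_cast<std::size_t>(inputFrames), 0);
    // Number of output slots covering the same duration as the input.
    const long long outputFrames = static_cast<long long>(inputFrames) * outFps / inFps;
    for (long long k = 0; k < outputFrames; ++k) {
        const long long src = k * inFps / outFps;  // input frame shown in slot k
        if (src < inputFrames) ++counts[static_cast<std::size_t>(src)];
    }
    return counts;
}
```

Under this model, feeding 10 fps input into a pipeline whose caps request 30 fps emits every input frame three times, while feeding 30 fps into 10 fps caps keeps only every third frame. This matches the behaviour observed in the test above, where the caller's frame rate presumably did not match `settings.fps`.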

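The code was therefore modified as follows. The videorate element is removed from the pipeline, and the appsink now uses sync=true, max-buffers=1 and max-lateness=1. The updated header, which has to declare the new members used here (m_bEncoderEnable, _settings, pause(), GetCurFormatTime() and SaveVideoDatToFile()), is not shown: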

    #include "JpegEncoder.h"

    #include <cstdio>   // fopen, fwrite, fclose
    #include <cstring>  // memcpy

    #include <gst/app/gstappsrc.h>
    #include <gst/video/video.h>

    #include "Check.h"

    #include <windows.h>

    #include <string>
    #include <chrono>
    #include <ctime>

    namespace sinftech {
    namespace tv {

    // appsink "new-sample" callback: hands each encoded JPEG frame to the handler.
    GstFlowReturn JpegEncoder::_onEncoded(GstAppSink *appsink, gpointer user_data) {
        JpegEncoder* pThis = static_cast<JpegEncoder*>(user_data);
        GstSample* sample = gst_app_sink_pull_sample(appsink);
        if (sample != NULL) {
            GstCaps* caps = gst_sample_get_caps(sample);
            gint width = 0, height = 0;
            GstStructure* structure = gst_caps_get_structure(caps, 0);
            gst_structure_get_int(structure, "width", &width);
            gst_structure_get_int(structure, "height", &height);
            GstBuffer* buffer = gst_sample_get_buffer(sample);
            if (buffer != NULL) {
                GstMapInfo map;
                if (gst_buffer_map(buffer, &map, GST_MAP_READ)) {
                    if (pThis->_handler) {
                        pThis->_handler(map.data, map.size, {uint16_t(width), uint16_t(height)});
                        //pThis->SaveVideoDatToFile(map.data, map.size, 2);
                    }
                    gst_buffer_unmap(buffer, &map);
                }
            }
            gst_sample_unref(sample);
        }
        return GST_FLOW_OK;
    }

    // Pipeline without videorate: appsrc ! queue ! videoconvert ! videoscale ! capsfilter ! queue ! jpegenc ! queue ! appsink
    JpegEncoder::JpegEncoder(Handler&& handler, bool bEncoderEnable)
        : _handler(std::move(handler)) {
        m_bEncoderEnable = bEncoderEnable;
        //gst_debug_set_active(TRUE);
        //gst_debug_set_default_threshold(GST_LEVEL_WARNING);
        if (m_bEncoderEnable) {
            gst_init(nullptr, nullptr);
            _pipeline = chk(gst_pipeline_new(nullptr));
            _source = chk(gst_element_factory_make("appsrc", nullptr));
            _queue0 = chk(gst_element_factory_make("queue", nullptr));
            _convert = chk(gst_element_factory_make("videoconvert", nullptr));
            _scale = chk(gst_element_factory_make("videoscale", nullptr));
            _capsfilter = chk(gst_element_factory_make("capsfilter", nullptr));
            _queue1 = chk(gst_element_factory_make("queue", nullptr));
            _encode = chk(gst_element_factory_make("jpegenc", nullptr));
            _queue2 = chk(gst_element_factory_make("queue", nullptr));
            _sink = chk(gst_element_factory_make("appsink", nullptr));

            g_object_set(_source, "is-live", true, "do-timestamp", true, "format", GST_FORMAT_TIME, NULL);
            g_object_set(_queue0, "leaky", 2, NULL);
            g_object_set(_capsfilter, "caps-change-mode", 1, NULL);
            g_object_set(_queue1, "leaky", 2, NULL);
            g_object_set(_queue2, "leaky", 2, NULL);
            //g_object_set(_sink, "sync", false, "enable-last-sample", false, "drop", true, "max-buffers", 200, "max-lateness", 1000000000ll, NULL);
            // max-lateness is a gint64 property, so it must be passed as a 64-bit value through varargs.
            g_object_set(_sink, "sync", true, "enable-last-sample", true, "drop", true, "max-buffers", 1, "max-lateness", (gint64)1, NULL);

            GstAppSinkCallbacks callbacks{ nullptr, nullptr, &_onEncoded };
            gst_app_sink_set_callbacks(GST_APP_SINK(_sink), &callbacks, this, nullptr);

            gst_bin_add_many(GST_BIN(_pipeline), _source, _queue0, _convert, _scale, _capsfilter, _queue1, _encode, _queue2, _sink, NULL);
            gst_element_link_many(_source, _queue0, _convert, _scale, _capsfilter, _queue1, _encode, _queue2, _sink, NULL);
        }
    }

    JpegEncoder::~JpegEncoder() {
        if (m_bEncoderEnable) {
            gst_element_set_state(_pipeline, GST_STATE_NULL);
            gst_object_unref(_pipeline);
        }
    }

    // Restarts the pipeline only when the settings actually changed.
    void JpegEncoder::start(const VideoSettings& settings) {
        if (!m_bEncoderEnable)
            return;
        if (0 != settings.fps && settings != _settings) {
            _settings = settings;
            pause();
            GstCaps* caps = gst_caps_new_simple("video/x-raw",
                "format", G_TYPE_STRING, "I420",
                "width", G_TYPE_INT, settings.resolution.width,
                "height", G_TYPE_INT, settings.resolution.height,
                "framerate", GST_TYPE_FRACTION, settings.fps, 1,
                NULL);
            g_object_set(_capsfilter, "caps", caps, NULL);
            gst_caps_unref(caps);
            gst_element_set_state(_pipeline, GST_STATE_PLAYING);
        }
    }

    void JpegEncoder::pause(void) {
        if (!m_bEncoderEnable)
            return;
        gst_element_set_state(_pipeline, GST_STATE_NULL);
    }

    void JpegEncoder::stop() {
        if (!m_bEncoderEnable)
            return;
        pause();
        _settings.fps = 0; // force the next start() to reapply the settings
    }

    // Copies one I420 frame into a GstBuffer and pushes it into appsrc.
    void JpegEncoder::encode(const uint8_t* data, size_t size, const Resolution& resolution) {
        if (!m_bEncoderEnable)
            return;
        //SaveVideoDatToFile(data, size, 1);
        GstState state = GST_STATE_NULL;
        GstState pending = GST_STATE_NULL;
        gst_element_get_state(_pipeline, &state, &pending, 0);
        if (state == GST_STATE_PLAYING || pending == GST_STATE_PLAYING) {
            GstBuffer* buffer = gst_buffer_new_and_alloc(size);
            if (buffer != NULL) {
                GstMapInfo map;
                if (gst_buffer_map(buffer, &map, GST_MAP_WRITE)) {
                    memcpy(map.data, data, size);
                    gst_buffer_unmap(buffer, &map);
                    GstVideoInfo videoInfo;
                    gst_video_info_set_format(&videoInfo, GST_VIDEO_FORMAT_I420, resolution.width, resolution.height);
                    GstCaps* caps = gst_video_info_to_caps(&videoInfo);
                    GstSample* sample = gst_sample_new(buffer, caps, nullptr, nullptr);
                    gst_caps_unref(caps);
                    if (sample != NULL) {
                        gst_app_src_push_sample(GST_APP_SRC(_source), sample);
                        gst_sample_unref(sample);
                    }
                }
                gst_buffer_unref(buffer);
            }
        }
    }

    // Current local time as "YYYY-M-D_H-M-S.ms", used in debug file names.
    std::string JpegEncoder::GetCurFormatTime() {
        auto now = std::chrono::system_clock::now();
        std::time_t time = std::chrono::system_clock::to_time_t(now);
        std::tm* tm = std::localtime(&time);
        auto ms = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count() % 1000;
        return std::to_string(tm->tm_year + 1900)
            + "-" + std::to_string(tm->tm_mon + 1)
            + "-" + std::to_string(tm->tm_mday)
            + "_" + std::to_string(tm->tm_hour)
            + "-" + std::to_string(tm->tm_min)
            + "-" + std::to_string(tm->tm_sec)
            + "." + std::to_string(ms);
    }

    // Debug helper: type 1 dumps the input YUV frame, type 2 the output JPEG.
    void JpegEncoder::SaveVideoDatToFile(const uint8_t* data, size_t size, int type) {
        std::string fileName;
        if (type == 1) {
            fileName = "input_" + GetCurFormatTime() + ".yuv";
        } else if (type == 2) {
            fileName = "output_" + GetCurFormatTime() + ".jpg";
        } else {
            return; // unknown type: nothing to write
        }
        FILE* fp = fopen(fileName.c_str(), "wb+");
        if (fp == NULL)
            return;
        fwrite(data, size, 1, fp);
        fclose(fp);
    }

    }//namespace tv
    }//namespace sinftech

2. Converting JPEG data to I420

JPegDecoder.h

    #pragma once

    #include <cstdint>
    #include <functional>  // std::function, used by Handler
    #include <vector>
    #include <thread>

    #include <gst/gst.h>
    #include <gst/app/gstappsink.h>

    #include "Resolution.h"
    #include "VideoSettings.h"

    namespace sinftech {
    namespace tv {

    class JpegDecoder {
    public:
        using Handler = std::function<void(const uint8_t* data, size_t size, const Resolution& resolution)>;

        JpegDecoder(Handler&& handler);
        ~JpegDecoder();

        void decode(const uint8_t* data, size_t size);

    private:
        static GstFlowReturn _onDecoded(GstAppSink *appsink, gpointer user_data);

        GstElement* _pipeline;
        GstElement* _source;
        GstElement* _queue0;
        GstElement* _decode;
        GstElement* _queue1;
        GstElement* _sink;
        Handler _handler;
    };

    }//namespace tv
    }//namespace sinftech

JPegDecoder.cpp

    #include "JpegDecoder.h"

    #include <cstring>    // memcpy
    #include <exception>
    #include <string>

    #include <gst/app/gstappsrc.h>
    #include <gst/video/video.h>

    #include "Check.h"

    #include <util/Log.h>

    namespace sinftech {
    namespace tv {

    // appsink "new-sample" callback: hands each decoded I420 frame to the handler.
    GstFlowReturn JpegDecoder::_onDecoded(GstAppSink *appsink, gpointer user_data) {
        JpegDecoder* pThis = static_cast<JpegDecoder*>(user_data);
        GstSample* sample = gst_app_sink_pull_sample(appsink);
        if (sample != NULL) {
            GstCaps* caps = gst_sample_get_caps(sample);
            gint width = 0, height = 0;
            GstStructure* structure = gst_caps_get_structure(caps, 0);
            gst_structure_get_int(structure, "width", &width);
            gst_structure_get_int(structure, "height", &height);
            GstBuffer* buffer = gst_sample_get_buffer(sample);
            if (buffer != NULL) {
                GstMapInfo map;
                if (gst_buffer_map(buffer, &map, GST_MAP_READ)) {
                    if (pThis->_handler) {
                        // Note: the rethrow below propagates through GStreamer's C frames
                        // and skips the unmap/unref calls that follow.
                        try {
                            pThis->_handler(map.data, map.size, {uint16_t(width), uint16_t(height)});
                        } catch (const std::exception& e) {
                            util::log(std::string("jpeg decoder exception: ") + e.what());
                            throw;
                        } catch (...) {
                            util::log("jpeg decoder exception: unknown");
                            throw;
                        }
                    }
                    gst_buffer_unmap(buffer, &map);
                }
            }
            gst_sample_unref(sample);
        }
        return GST_FLOW_OK;
    }

    // Pipeline: appsrc ! queue ! jpegdec ! queue ! appsink (I420 output), started immediately.
    JpegDecoder::JpegDecoder(Handler&& handler)
        : _handler(std::move(handler)) {
        gst_init(nullptr, nullptr);
        _pipeline = chk(gst_pipeline_new(nullptr));
        _source = chk(gst_element_factory_make("appsrc", nullptr));
        _queue0 = chk(gst_element_factory_make("queue", nullptr));
        _decode = chk(gst_element_factory_make("jpegdec", nullptr));
        _queue1 = chk(gst_element_factory_make("queue", nullptr));
        _sink = chk(gst_element_factory_make("appsink", nullptr));

        g_object_set(_source, "is-live", true, "do-timestamp", true, "format", GST_FORMAT_TIME, NULL);
        g_object_set(_queue0, "leaky", 2, NULL);
        g_object_set(_queue1, "leaky", 2, NULL);

        GstCaps* caps = gst_caps_new_simple("video/x-raw", "format", G_TYPE_STRING, "I420", NULL);
        g_object_set(_sink, "caps", caps, "sync", false, "enable-last-sample", false, "drop", true, "max-buffers", 200, "max-lateness", 1000000000ll, NULL);
        gst_caps_unref(caps);

        GstAppSinkCallbacks callbacks{nullptr, nullptr, &_onDecoded};
        gst_app_sink_set_callbacks(GST_APP_SINK(_sink), &callbacks, this, nullptr);

        gst_bin_add_many(GST_BIN(_pipeline), _source, _queue0, _decode, _queue1, _sink, NULL);
        gst_element_link_many(_source, _queue0, _decode, _queue1, _sink, NULL);
        gst_element_set_state(_pipeline, GST_STATE_PLAYING);
    }

    JpegDecoder::~JpegDecoder() {
        gst_element_set_state(_pipeline, GST_STATE_NULL);
        gst_object_unref(_pipeline);
    }

    // Copies one JPEG image into a GstBuffer and pushes it into appsrc.
    void JpegDecoder::decode(const uint8_t* data, size_t size) {
        GstState state = GST_STATE_NULL;
        GstState pending = GST_STATE_NULL;
        gst_element_get_state(_pipeline, &state, &pending, 0);
        if (state == GST_STATE_PLAYING || pending == GST_STATE_PLAYING) {
            GstBuffer* buffer = gst_buffer_new_and_alloc(size);
            if (buffer != NULL) {
                GstMapInfo map;
                if (gst_buffer_map(buffer, &map, GST_MAP_WRITE)) {
                    memcpy(map.data, data, size);
                    gst_buffer_unmap(buffer, &map);
                    GstCaps* caps = gst_caps_new_simple("image/jpeg", NULL);
                    GstSample* sample = gst_sample_new(buffer, caps, nullptr, nullptr);
                    gst_caps_unref(caps);
                    if (sample != NULL) {
                        gst_app_src_push_sample(GST_APP_SRC(_source), sample);
                        gst_sample_unref(sample);
                    }
                }
                gst_buffer_unref(buffer);
            }
        }
    }

    }//namespace tv
    }//namespace sinftech
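The decoder's decode() pushes whatever bytes it receives into the pipeline, so a cheap sanity check on the caller's side can filter out truncated or non-JPEG buffers first. The following is only a sketch with a hypothetical function name; it relies on the fact that a JPEG stream begins with the SOI marker (FF D8) and ends with the EOI marker (FF D9).

```cpp
#include <cstddef>
#include <cstdint>

// Returns true when the buffer starts with SOI (FF D8) and ends with EOI (FF D9).
// This does not validate the image contents, only the framing markers.
inline bool looksLikeJpeg(const std::uint8_t* data, std::size_t size) {
    if (data == nullptr || size < 4) return false;
    const bool hasSoi = data[0] == 0xFF && data[1] == 0xD8;
    const bool hasEoi = data[size - 2] == 0xFF && data[size - 1] == 0xD9;
    return hasSoi && hasEoi;
}
```

The check is deliberately strict: a source that appends padding bytes after the EOI marker would be rejected, so the trailing test may need to be relaxed for such inputs.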
